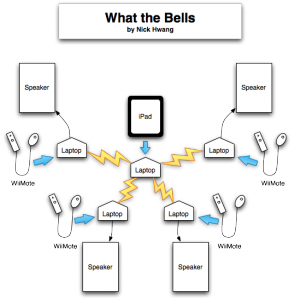

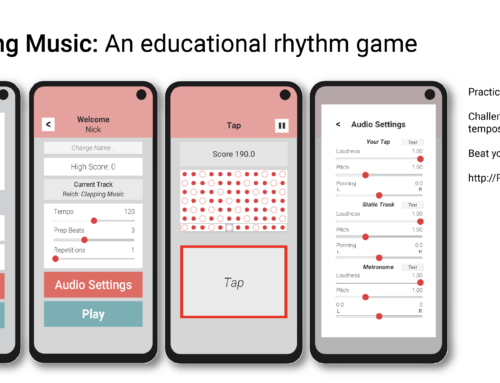

‘What the Bells’ is a musical piece for Wiimotes, laptops, and (recently added) iPhone/iPad.

‘What the Bells’ involves four bell players, each with Wii-Motes and a laptop running a client Max patch. A central laptop sends performer instructions via OSC. Global parameters such as timbre changes and delay, controlled by iPhone or iPad, are sent to the performers’ laptops.
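To give a feel for what travels over the network, here is a minimal Python sketch of how an OSC 1.0 message is packed and sent over UDP. It is not the Max patch used in the piece. The address `/bell/1/ring`, its two arguments, and the host and port are hypothetical and chosen only for illustration.

```python
import socket
import struct


def osc_pad(raw: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC 1.0 requires."""
    padded_len = (len(raw) // 4 + 1) * 4
    return raw.ljust(padded_len, b"\x00")


def osc_message(address: str, *args) -> bytes:
    """Pack an OSC message with int32, float32, and string arguments."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("bool arguments are not handled in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
        elif isinstance(arg, str):
            tags += "s"
            payload += osc_pad(arg.encode("ascii"))
        else:
            raise TypeError(f"unsupported argument type: {type(arg).__name__}")
    return osc_pad(address.encode("ascii")) + osc_pad(tags.encode("ascii")) + payload


def send_osc(host: str, port: int, address: str, *args) -> None:
    """Send one OSC message as a single UDP datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message(address, *args), (host, port))


# Hypothetical instruction: tell player 1 to ring bell 3 at half volume.
message = osc_message("/bell/1/ring", 3, 0.5)
```

A central controller could call `send_osc` once per performer laptop. In Max, the `udpreceive` object decodes datagrams like this into ordinary Max messages on the client side.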

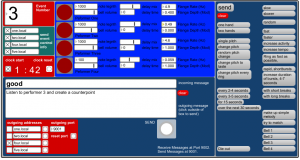

Main Laptop Controller Interface

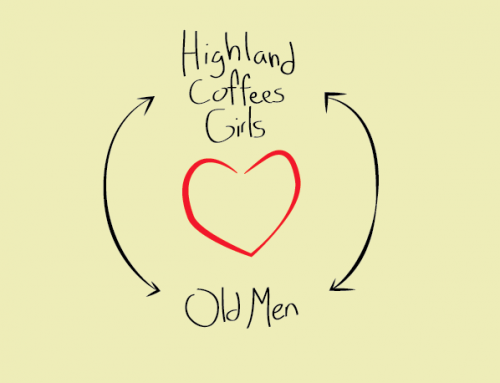

The piece was an exercise on many levels.

1. Create a piece for Laptop Ensemble.

2. Use controllers that convey an audience-accessible, associative, real-world physicality. (Something about which an audience could say, ‘Oh, yeah, she’s swinging the Wii-mote like you would a handbell!’)

3. Use OSC as a means of communication: not just for exchanging data, but also for sending performance instructions.

4. Create a robust Max/MSP patch with an elegant user interface.

5. Reduce Setup time.

(6. Visualization of Messages and Control Data is in the works.)

Each component was born for this particular piece, but as I was developing them, I saw the need to make everything modular. Now each part can be recycled for future use: the messaging system, the Wii-control interface for Max/MSP, and the iPhone/iPad interface for Max/MSP, along with many components I wrote but did not implement in this piece, including Event/Preset Control, Uni/Omni-Directional Control flexibility (the client laptops can actually control each other), debugging controls, dynamic interface transformations, and a few others.

I built each part of my Max code that I saw as modular and relevant for future use as a sub-patch or personal Max object, reducing the need to rewrite or even copy and paste basic tasks.

The piece has been performed twice this semester and will be performed at 8 pm on April 14 at LSU’s Shaver Theatre in the Music and Dramatic Arts building during the Festival of Contemporary Music.